Pure play small cap bet on making GPU data centres more efficient

One of the biggest risk/reward plays out there: a bet on an HBM alternative for powering AI workloads in HPC data centres.

We are in the midst of a paradigm shift that usually occurs once every 20 years. More demand for intelligence tokens is leading to an absolute inflection in demand for inference!!

Yes, yes. We all know the data centre build-out is critical and growing fast, but tell me: where is the capex going to go next?

We are not going to be able to satisfy the demand for test-time compute in the foreseeable future. That's why every major breakthrough in inference data centre optimization is worth a look.

I came across this stock while looking for solutions to the current bottlenecks in AI GPU data centres. But before I dive into that:

What are the biggest problems in AI data centres today?

1. Not enough bandwidth between memory and the GPU, which leaves very expensive GPUs idling for more than half of the time in some cases, according to Meta.

2. Excessive heat and excessive power requirements.

I will explore opportunities in #2 in a future post. For now, let's focus on #1. Here are some excerpts from Meta's engineering blog describing how they have been battling GPU idling:

“When thousands of H100s work on the same job, the job can stall if even one GPU falls behind because it is starved for data. We have seen utilization dip well below 50-60 percent when network or memory bandwidth cannot keep up.” - Meta Engineering blog “Building Meta’s GenAI Infrastructure”, 12 Mar 2024

“At cluster scale, HBM and network bandwidth, not flops, limit throughput. If input pipelines are not optimized, expensive accelerators end up idling for more than half the step.”

- Meta Engineering blog “Maintaining large-scale AI capacity at Meta”, 12 June 2024

“GPU stalls caused by HBM bandwidth starvation were a top contributor to the 419 interruptions we tracked during 54 days of training.”

- Meta Engineering blog “RoCE networks for distributed AI training at scale”

While compute capabilities have scaled exponentially, memory bandwidth has only improved by factors of 1.4-1.6 every two years. This creates a "memory wall" where AI workloads become increasingly memory-bound rather than compute-bound.
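To put the quoted 1.4-1.6x-per-two-years figure in perspective, here is a small Python sketch that compounds it over a decade. The text gives no number for compute growth, so `compute_factor` below is a purely hypothetical placeholder used only to show how the gap widens.

```python
def growth_over(years: float, factor_per_2yrs: float) -> float:
    """Compound a per-two-year improvement factor over `years` years."""
    return factor_per_2yrs ** (years / 2)


# Memory-bandwidth improvement range quoted above: 1.4-1.6x every two years.
for factor in (1.4, 1.6):
    print(f"{factor}x per 2 years -> {growth_over(10, factor):.1f}x over 10 years")

# HYPOTHETICAL: compute growth is not quantified in the text; 3.0x per two
# years is a placeholder chosen only to illustrate the widening gap.
compute_factor = 3.0
gap = growth_over(10, compute_factor) / growth_over(10, 1.5)
print(f"compute vs bandwidth gap after 10 years: {gap:.0f}x")
```

With these inputs, bandwidth grows roughly 5.4x to 10.5x in ten years, while the placeholder compute curve pulls ahead of the mid-range bandwidth curve by about 32x. That widening ratio is the "memory wall".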

For this exact reason, High Bandwidth Memory (HBM) was the talk of the town in 2022, 2023 and 2024. Everybody and their grandma has been talking about investing in HBM.

But HBM has a problem:

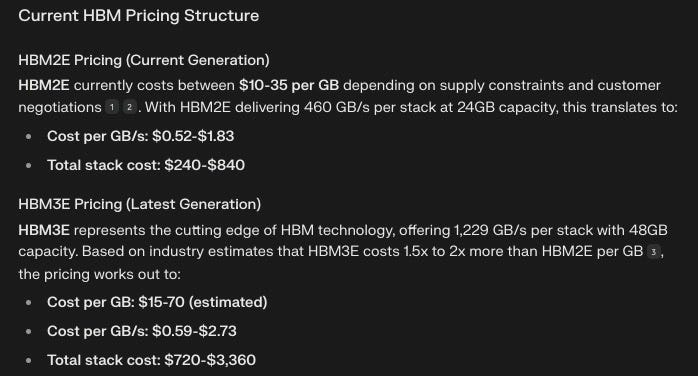

HBM is extremely expensive. It has a unit price of $10-20 per GB and costs approximately 5 times more than DDR5 memory. The NVIDIA H100, for example, carries 80GB of HBM2E costing around $1,600 out of an estimated ~$3,000 total manufacturing cost. This high cost is driven by complex 3D stacking technology, silicon interposers, and low yields (only 40-60% for HBM3e).
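The $1,600 figure sits at the top of the quoted $10-20/GB range. A quick Python check of that arithmetic, using only the numbers in the paragraph above:

```python
def hbm_cost_usd(capacity_gb: float, price_per_gb: float) -> float:
    """Memory cost = capacity x unit price."""
    return capacity_gb * price_per_gb


low = hbm_cost_usd(80, 10)    # bottom of the quoted $10-20/GB range
high = hbm_cost_usd(80, 20)   # top of the range
print(f"80 GB of HBM: ${low:,.0f} to ${high:,.0f}")

# Share of the ~$3,000 total cost figure quoted above
print(f"top-of-range share of $3,000: {high / 3000:.0%}")

# If HBM is ~5x the price of DDR5 per GB, the implied DDR5 price range is:
ddr5_low, ddr5_high = 10 / 5, 20 / 5
print(f"implied DDR5: ${ddr5_low:.0f}-{ddr5_high:.0f} per GB")
```

This prints $800 to $1,600 for the HBM, about 53% of the $3,000 figure at the top of the range, and an implied DDR5 price of $2-4 per GB.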

But what if there was another way to improve GPU utilization? Could there be another way to crack the limiting factor in the data centre when it comes to bandwidth and efficiency?

Let’s analyze the trends.

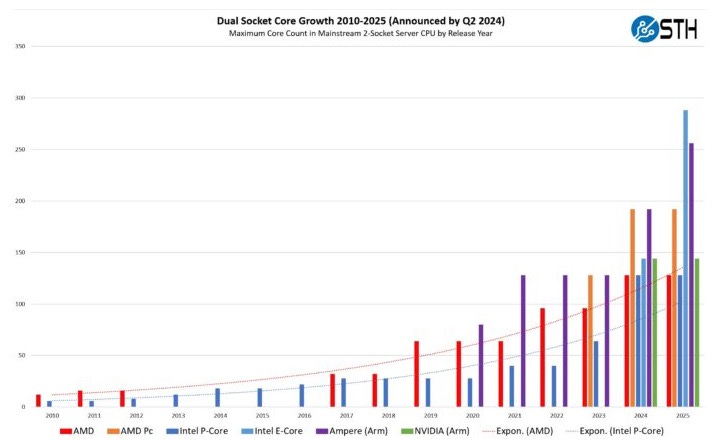

Top-end server CPU core counts have grown markedly, from under 32 cores a decade ago to over 128 cores today, with parts exceeding 200 cores projected by Q1 2025. This growth is driven largely by the need to handle AI training, inference, and large-scale data processing workloads.

Intel's Xeon 6 (P-core “Granite Rapids” and E-core “Sierra Forest”): Intel has released server CPUs with up to 128 P-cores and 144 E-cores, explicitly targeting AI and data centre workloads. These ramp to volume production in summer 2025.

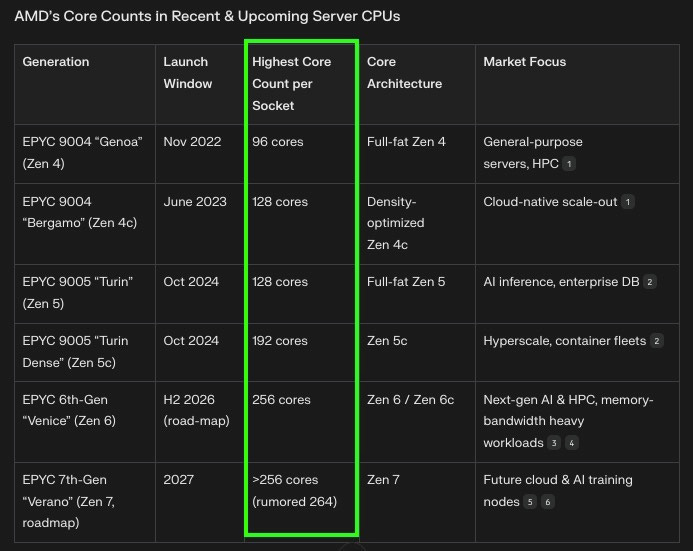

AMD EPYC 6th Generation “Venice”: AMD's next server processors feature up to 256 cores, aimed at next-gen AI, HPC and memory-bandwidth-heavy workloads.

Industry analyst reports: Gartner and IDC note that AI and big data workloads are key drivers for increasing core counts in server CPUs to improve parallelism and throughput.

Cloud providers' hardware upgrades: Major cloud providers like AWS and Microsoft Azure are deploying multi-core CPUs to support AI model training and big data processing.

So the point is, the tech is getting good at using higher core counts efficiently to serve AI inference workloads.

Okay, but wait, what's the catch? What do these extra CPU cores want in return? Why don't we scale to 2,343,582,434,943 cores tomorrow?

Each core keeps dozens of memory requests in flight; if the memory channels cannot deliver enough bytes fast enough, the cores stall while waiting for data.

More cores, more money (memory) problems.
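Why cores stall can be sketched with two back-of-the-envelope formulas. The first is peak channel bandwidth (transfer rate times 8 bytes for a 64-bit DDR5 DIMM) shared across all cores. The second is Little's law: one core's sustained bandwidth equals requests in flight times bytes per request, divided by latency. The platform (12 channels, 128 cores), the 64-byte cache lines, the 20 requests in flight and the ~100 ns latency are hypothetical round numbers, not figures from the text.

```python
LINE_BYTES = 64   # ASSUMPTION: 64-byte cache lines per memory request
BUS_BYTES = 8     # DDR5 DIMM: 64 data bits (two 32-bit subchannels) per transfer


def channel_gbps(mts: int) -> float:
    """Peak GB/s of one DDR5 channel at `mts` megatransfers per second."""
    return mts * BUS_BYTES / 1000


def per_core_gbps(mts: int, channels: int, cores: int) -> float:
    """Each core's fair share of total peak bandwidth."""
    return channel_gbps(mts) * channels / cores


def littles_law_gbps(outstanding: int, latency_ns: float) -> float:
    """Bandwidth one core can consume with `outstanding` requests in flight."""
    return outstanding * LINE_BYTES / latency_ns  # bytes per ns == GB/s


# HYPOTHETICAL platform: 12 channels of DDR5-6400 shared by 128 cores.
print(f"{channel_gbps(6400):.1f} GB/s per channel")
print(f"{per_core_gbps(6400, 12, 128):.1f} GB/s available per core")
# HYPOTHETICAL core: 20 requests in flight, ~100 ns loaded latency.
print(f"{littles_law_gbps(20, 100):.1f} GB/s demanded per core")
```

Under these assumptions each core could consume about 12.8 GB/s, but its share of the channels is only about 4.8 GB/s, so cores spend time waiting. Adding cores without adding bandwidth shrinks that share further.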

Here is the thing that hasn't become obvious to the market yet: the next logical step in the evolution of server memory is called MRDIMM.

What is MRDIMM?

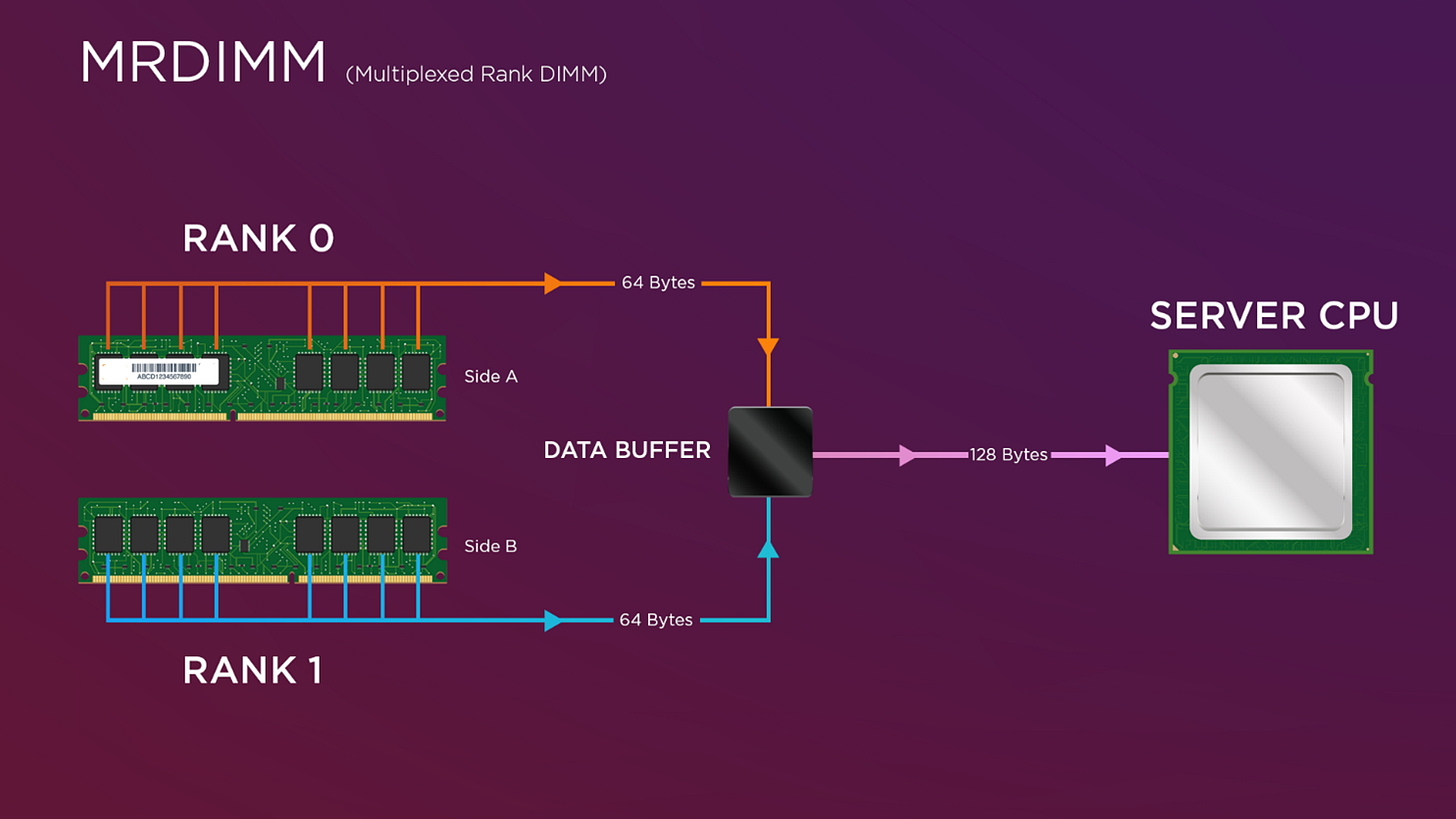

MRDIMM (Multiplexed Rank Dual In-line Memory Module) is an evolution of the standard DDR5 server memory module architecture, designed to double memory throughput and increase capacity per DIMM slot without altering the physical interface.
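Here is a toy Python model of the "multiplexed rank" idea. Two ranks on the same module are read at the same time, and a buffer interleaves their data onto the single CPU-facing interface, so the host sees twice the per-rank data rate. The half-rate figure for the ranks is an assumption about how Gen 1 parts are built. The model ignores timing, commands and ECC entirely.

```python
from itertools import chain


def multiplex(rank_a: list[str], rank_b: list[str]) -> list[str]:
    """Interleave data beats from two ranks onto one host-side stream."""
    return list(chain.from_iterable(zip(rank_a, rank_b)))


# ASSUMPTION: each rank runs at half the host-side rate (4,400 MT/s for Gen 1).
rank_rate_mts = 4400
# Both ranks deliver at once, so the host-side interface runs at twice the rate.
host_rate_mts = 2 * rank_rate_mts

stream = multiplex(["A0", "A1", "A2"], ["B0", "B1", "B2"])
print(host_rate_mts, stream)
```

The output is `8800` followed by the beats alternating between rank A and rank B. The CPU sees one fast channel even though neither rank runs at that speed on its own.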

If you want to understand why MRDIMM is the future, check out this article from Irrational Investor. It is thoroughly researched and provides great technical reasoning.

Okay, so let's keep it simple and follow the breadcrumbs:

More reasoning → more test-time compute → more data centres for inference → more cores inside the servers → more efficiency required → more memory → more MRDIMM

Let me list all the reasons why MRDIMM is the next logical step as an alternative to HBM, especially for inference:

1. Immediate Bandwidth Relief for AI-Heavy Servers

Double-speed jump without new sockets. First-generation Multiplexed Rank DIMMs (MRDIMMs) take standard DDR5 DRAM that tops out near 6,400 MT/s and deliver it to the CPU at 8,800 MT/s, a ~40% effective uplift over today's fastest RDIMMs.

MRDIMM provides double bandwidth and density on the same RAM stick.

MRDIMMs act like two virtual memory channels on one stick, saving motherboard space.

Gen 1 MRDIMM runs at 8,800 MT/s effective; Gen 2 will hit 12,800 MT/s.

Road-mapped to 12,800 MT/s (Gen 2) in 2025 shipments. Chipset vendors have already sampled 12.8 Gb/s parts, extending bandwidth per channel by another ~45% over Gen 1

These uplifts directly target the biggest bottleneck in large-model inference and memory-bound HPC codes, where GPUs or many-core CPUs idle while waiting for data.
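The speed figures in this section can be checked in a few lines of Python. 8,800 MT/s over 6,400 MT/s is a 37.5% uplift (the "~40%" above), and 12,800 over 8,800 is about 45%. Only Gen 2 versus today's RDIMMs is a full doubling. The 8-bytes-per-transfer figure assumes the standard 64-bit data width of a DDR5 DIMM.

```python
def uplift(new_mts: int, old_mts: int) -> float:
    """Fractional speed gain of new_mts over old_mts."""
    return new_mts / old_mts - 1


def peak_gbps(mts: int, bytes_per_transfer: int = 8) -> float:
    """Peak GB/s per channel, assuming a 64-bit (8-byte) data bus."""
    return mts * bytes_per_transfer / 1000


rdimm, gen1, gen2 = 6400, 8800, 12800
print(f"Gen 1 vs RDIMM: +{uplift(gen1, rdimm):.1%}")
print(f"Gen 2 vs Gen 1: +{uplift(gen2, gen1):.1%}")
print(f"Gen 2 vs RDIMM: +{uplift(gen2, rdimm):.1%}")
for name, mts in (("RDIMM", rdimm), ("MRDIMM Gen 1", gen1), ("MRDIMM Gen 2", gen2)):
    print(f"{name}: {peak_gbps(mts):.1f} GB/s per channel")
```

That works out to roughly 51.2, 70.4 and 102.4 GB/s of peak bandwidth per channel for RDIMM, Gen 1 and Gen 2 respectively.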

2. Much Cheaper Path than Switching to HBM or CXL

Leverages the existing DDR5 ecosystem. MRDIMM keeps the same DIMM slot and PCB routing, so OEMs can offer it as a drop-in upgrade on Intel Xeon 6 (Granite Rapids) and future platforms, without the costly board redesign that HBM-based solutions require.

Lower $/GB than HBM. Tall-form-factor (TFF) MRDIMMs raise capacities to 256 GB per module using commodity DRAM; HBM3 still carries a multi-fold cost premium and is limited in capacity per stack. Check out this incredible resource.

3. Demand Pull from CPU Vendors

Intel Xeon 6 (Granite Rapids) validation. Intel public demos show up to 33% system performance gain when MRDIMMs replace RDIMMs on the same CPU. AMD's Turin roadmap points to a similar need as core counts rise beyond 192.

Server OEM launch windows. Major vendors (Dell, Lenovo, HPE) are scheduling next-gen platforms for 2H 2025; memory qualification has to finish this year, pushing MRDIMM volumes into the next two to three quarters.

4. Ecosystem Lock-in for Interface-Chip Makers

Each MRDIMM uses a 1 × MRCD + 10 × MDB buffer architecture. That lifts interface-chip content per DIMM roughly 4-5× over an RDIMM in dollar terms, creating an immediate revenue spike for suppliers such as Rambus, Montage, Renesas and Netlist [35].

Buffers are already in engineering or pilot production, so chipmakers are incentivised to push MRDIMM adoption to amortize new mask sets quickly.

5. Macro Tailwinds: AI Infrastructure CapEx

Cloud providers accelerating GenAI inference clusters need more memory bandwidth per dollar than GPU-only nodes can deliver; CPU-centric inference farms (e.g., AWS Graviton-based) see immediate benefit.

Industry analysts label MRDIMM/MCRDIMM the “new sought-afters” for server memory in 2025 as hyperscale demand collides with the DDR5 bandwidth wall [7].

6. Time-Sensitive Factors Driving Next-Quarter Urgency

Qualification cycles. Server vendors freeze memory specs 6–9 months before launch; that window is now for 1H-2025 platforms.

Supply-chain allocation. Buffer-chip foundry slots for 2025 are being booked; OEM purchase orders in the next two quarters will lock volume availability.

First-mover TCO advantage. Early adopters can advertise higher core-to-memory bandwidth ratios and win AI cloud business before competitors refresh.

BOTTOM LINE:

MRDIMM is the fastest, lowest-disruption way to double the bandwidth over the same channel. Think of it as adding another floor to a house: the footprint of the foundation stays the same, but the living area just doubled.

The next few quarters are the critical window when OEM qualifications, hyperscale purchase orders and buffer-chip ramp all converge, making MRDIMM a central storyline in server BOMs and semiconductor revenues through 2025.

Lastly, if you are still looking for more conviction on MRDIMM, check out this article. It is very well explained.

OK, so let's dive into the most undervalued pure-play stock exposed to MRDIMMs.

The riskiest bet on MRDIMM is Netlist (NLST): a $175M market cap, or about $270M fully diluted with warrants.

NLST is currently priced at $0.62, with about 450M shares outstanding once future dilution is included.
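Here is a quick Python check on how these numbers fit together, using only the price, market cap and diluted share count quoted above. At $0.62, 450M shares works out to about $279M, in the same ballpark as the ~$270M fully diluted figure. The $175M market cap implies roughly 282M shares outstanding today.

```python
price = 0.62                   # USD per share
basic_cap = 175e6              # market cap quoted above
fully_diluted_shares = 450e6   # share count including future dilution

basic_shares = basic_cap / price
fully_diluted_cap = price * fully_diluted_shares
overhang = fully_diluted_shares - basic_shares  # shares that dilution could add

print(f"implied basic shares: {basic_shares / 1e6:.0f}M")
print(f"fully diluted market cap: ${fully_diluted_cap / 1e6:.0f}M")
print(f"dilution overhang: {overhang / 1e6:.0f}M shares ({overhang / basic_shares:.0%})")
```

The overhang of roughly 168M shares (about 59% on top of today's count) is the dilution the rest of this post keeps warning about.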

THESIS SUMMARY

Key patents cover HBM and DDR5 memory—critical for AI servers.

HBM market exploding (SK hynix: $0→$25B in 5yrs)

MRDIMMs to capture 10-20% server market by 2027

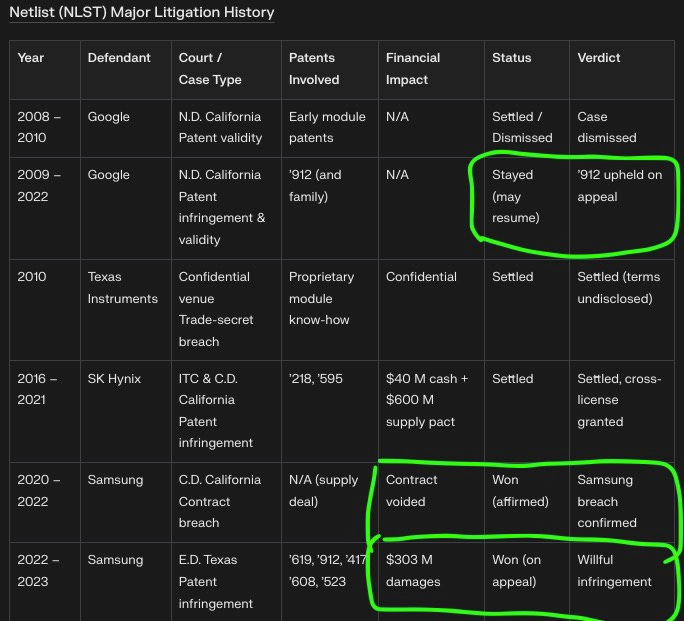

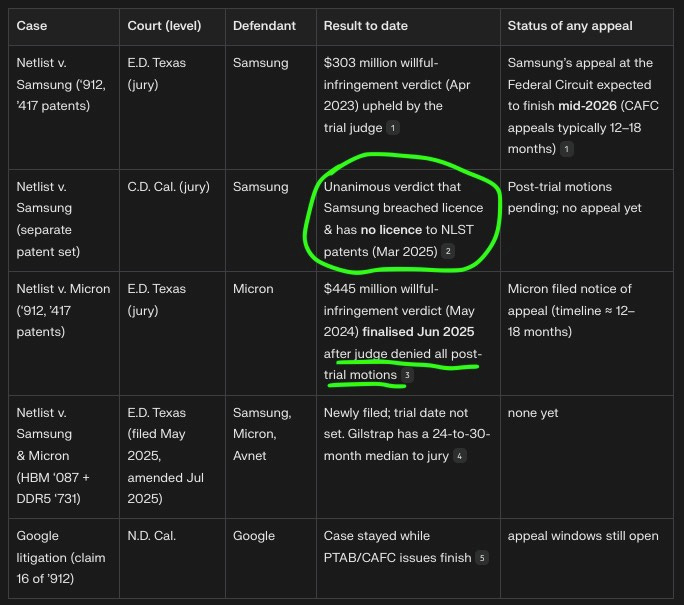

Won $445M from Micron and $303M + $118M from Samsung in patent cases. And that's only for past damages, not future infringement.

Samsung lost 3x already; appeals likely to fail.

Federal Circuit Court rulings in 2026 could finalize billion-dollar IP wins.

The CEO and founder of 25 years made an insider purchase of 4.3M shares for $3M on 24 June 2025. He had never made an insider purchase in the previous 25 years. I think it's a sizable portion of his net worth.

HBM market is exploding: SK hynix alone projects $25B in sales—Netlist’s patents ride this wave.

Netlist, a California-based memory designer, licenses proprietary DRAM and hybrid DRAM-NAND modules whose patented load-reduction, rank-multiplication and distributed-buffering techniques boost bandwidth and capacity per DIMM.

Netlist already holds 130+ US and foreign patents focused on DRAM.

Those same inventions underpin the new DDR5 Multiplexed Rank DIMM (MRDIMM) standard, so the company’s roadmap and revenue model are now focused almost entirely on MRDIMM technology—making Netlist a pure play on this next-generation memory format.

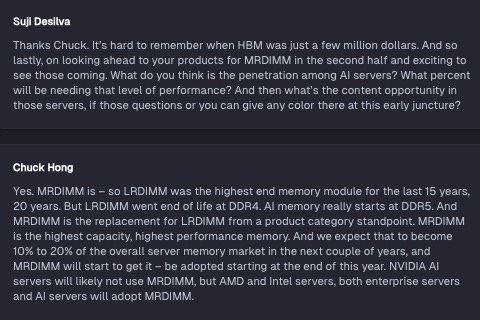

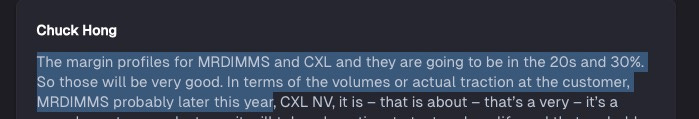

CEO Chuck Hong explains why MRDIMMs are important.

The stock is so depressed because of its many ongoing lawsuits. But it has already won several cases against Samsung, and it is very likely that the cases will close in its favour.

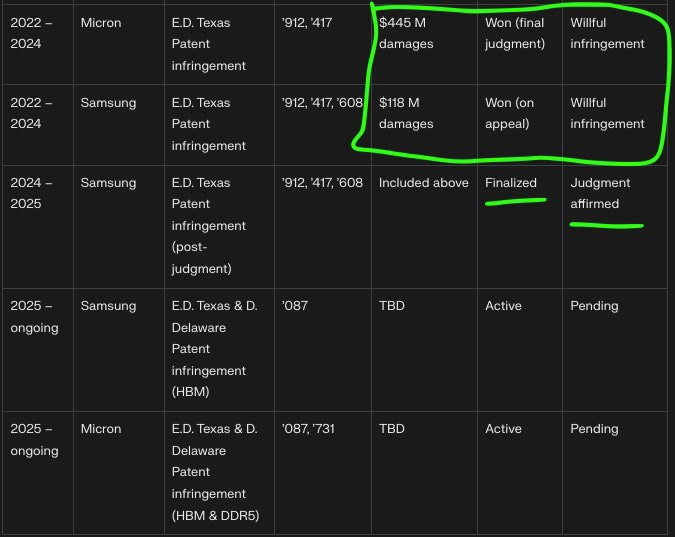

Here is another map of all the ongoing litigation and its current status. Stockd is the analyst who keeps it up to date.

We are less than a year away from a CAFC decision on the '463 litigation and the 5 companion patents covering DDR4, DDR5, and HBM.

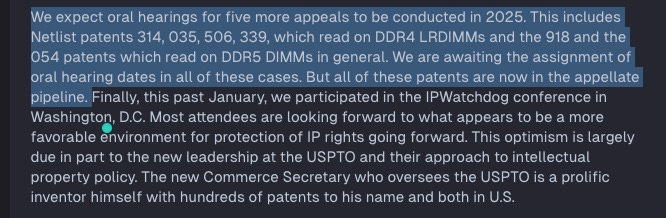

CEO Hong said this on 27 March 2025:

It is important to note that the CAFC is not looking to correct logical errors in either side's arguments; it only addresses procedural errors that would have influenced the Final Written Decision (FWD).

The CAFC can do one of three things: uphold the PTAB FWD, vacate it, or remand it to the PTAB for another go.

CAFC patent validity decisions arrive within an average of 18-24 months (consolidation with related trial appeals could extend the timeframe).

CAFC found in favor of NLST for the '035 and '523 patents. Here is the current status of all the litigations.

* '314 filed 12/23 - decision expected before 12/25 (filed by Micron) - oral hearing anticipated July 2025

* '506 filed 02/24 - decision expected before 02/26 - oral hearing should be in fall 2025

* '339 filed 04/24 - decision expected before 04/26

* '054 filed 05/24 - decision expected before 05/26

* '918 filed 05/24 - decision expected before 05/26

* '060 filed 08/24 - decision expected before 08/26

* '160 filed 08/24 - decision expected before 08/26

* '912 filed 09/24 - decision expected before 09/26

* '215 filed 12/10/2024 - decision expected before 12/2026

* '417 filed 12/10/2024 - decision expected before 12/2026

* '608 filed 1/23/2025 - decision expected before 1/2027 (if Samsung's appeal request is accepted)
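The "earlier than" dates in the list above are simply the filing month plus 24 months, the upper end of the 18-24 month average mentioned earlier. Here is a small Python sketch that reproduces the window for a few of the appeals. It works at month precision only and makes no prediction about any individual case.

```python
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, pinned to day 1 (month-level precision)."""
    idx = d.year * 12 + (d.month - 1) + months
    return date(idx // 12, idx % 12 + 1, 1)


def decision_window(filed: date, lo: int = 18, hi: int = 24) -> tuple[date, date]:
    """Earliest and latest expected decision month for an appeal filed on `filed`."""
    return add_months(filed, lo), add_months(filed, hi)


appeals = {  # filing months taken from the list above
    "'314": date(2023, 12, 1),
    "'506": date(2024, 2, 1),
    "'608": date(2025, 1, 1),
}
for patent, filed in appeals.items():
    start, end = decision_window(filed)
    print(f"{patent}: {start:%m/%Y} to {end:%m/%Y}")
```

For '314 this gives June 2025 to December 2025, matching the 12/25 bound in the list.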

The '608 IPR FWD found all challenged claims patentable, so now we await confirmation that Samsung will appeal the IPR FWD.

The '463 litigation and the 5 companion patents are slowly getting closer to resolution (in 2026).

The CAFC has asked both sides for scheduling conflicts over the next 7 months (!) for oral arguments on all 5 patents and the '463 litigation.

NLST would be happy to have oral arguments this summer. I expect Samsung to claim conflicts for all of 2025 and shoot for January 2026.

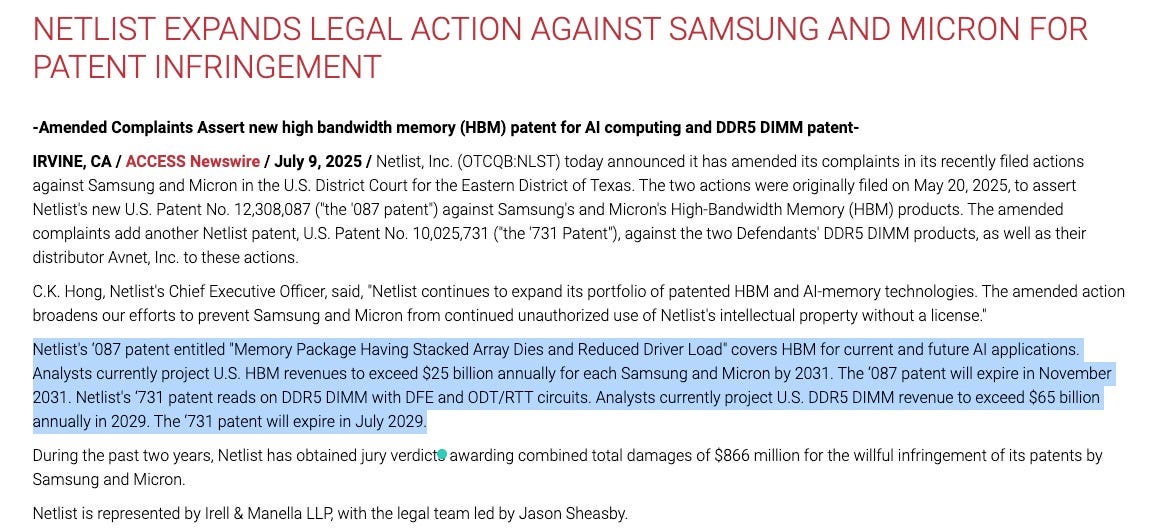

There is a new infringement suit asserting a new patent ('087). You can have a look at the official statement here.

NLST did not go after distributors of the big three (e.g. Avnet, Arrow, Future, Nu-Horizons, etc.) in the initial litigation, since a few of them were NLST customers. Now that they are no longer NLST customers, they are being sued as well.

On Reddit, there is amazing research and DD on the topic. If you got this far, do check these out to build further conviction:

1. Reddit: pretty dense knowledge about the NLST cases.

2. Stockd: the analyst who made the litigation map above and is incredibly resourceful in following up on all the news and court documents.

3. https://stocktwits.com/100lbstriper: this guy has been pumping out the original documents from the court rulings.

Now let's look at the financial health and key metrics of the company.

There are three takeaways from the financial snapshot at this point.

a. There is real revenue. Yes, it is not growing right now, but it is real.

b. Cash is precariously low. The latest SEC filing (Q1 2025) puts it at $26M, and the company raised another $11.3M on 24 June 2025. After cash burn since the end of Q1, my best estimate is that they are holding around $30M.

c. There is dilution, and if you are buying in, you must prepare for and accept further dilution. The company is trying to stay afloat while the big companies' stalling tactics are in full play.
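The cash figures in point b. add up as follows: $26M + $11.3M is about $37.3M, so the ~$30M estimate implies roughly $7M spent since the end of Q1. The sketch below also turns a cash balance into months of runway. The $2.5M monthly burn is a hypothetical placeholder, since no burn rate is given here.

```python
q1_cash = 26.0      # $M, Q1 2025 SEC filing
june_raise = 11.3   # $M, raised on 24 June 2025
estimate = 30.0     # $M, the author's best estimate of current cash

gross = q1_cash + june_raise       # cash before any spending since Q1
implied_burn = gross - estimate    # spending implied by the ~$30M estimate


def runway_months(cash_musd: float, monthly_burn_musd: float) -> float:
    """Months until cash runs out at a constant net burn rate, with no new raises."""
    return cash_musd / monthly_burn_musd


print(f"gross: ${gross:.1f}M, implied spend since Q1: ${implied_burn:.1f}M")
# HYPOTHETICAL burn rate, for illustration only; not taken from any filing.
print(f"runway at $2.5M/month: {runway_months(estimate, 2.5):.0f} months")
```

Swap in the actual net burn from the next quarterly filing to get a real runway estimate.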

Track record so far

During the past two years, Netlist has obtained jury verdicts awarding combined total damages of $866 million for the willful infringement of its patents by Samsung and Micron.
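The $866M total is just the three awards listed in the thesis summary added together. This short Python check also sets that total against the ~$279M fully diluted market cap implied by $0.62 × 450M shares. Note that this is gross award value, before any appellate reduction, interest or timing discount.

```python
awards_musd = {   # labels are descriptive only
    "Micron": 445,
    "Samsung, first award": 303,
    "Samsung, second award": 118,
}
total = sum(awards_musd.values())

price, fully_diluted_shares = 0.62, 450e6
fully_diluted_cap_musd = price * fully_diluted_shares / 1e6

print(f"total awards: ${total}M")
print(f"awards vs fully diluted market cap: {total / fully_diluted_cap_musd:.1f}x")
```

On these numbers, the awards are worth about 3.1 times the fully diluted market cap.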

Why recent momentum favors Netlist

Clean win-loss record in juries. Netlist is 3-0 in jury trials since 2023 and each verdict was for willful infringement or breach, showing that lay fact-finders find the patents valid and infringed

Patent Office outcomes. Key PTAB challenges against the '912 and '417 patents failed, so Samsung and Micron must now rely chiefly on the Federal Circuit, where the burden is higher because validity findings are reviewed for substantial-evidence error rather than re-decided from scratch.

Clock for damages keeps running. The $445M Micron award only covered 2021-2024 sales; infringement that continues through appeal adds leverage for settlement.

Same patents, second bite. The new HBM/DDR5 lawsuit asserts fresh patents (087) that have not yet been challenged; defendants now face both old and new liabilities.

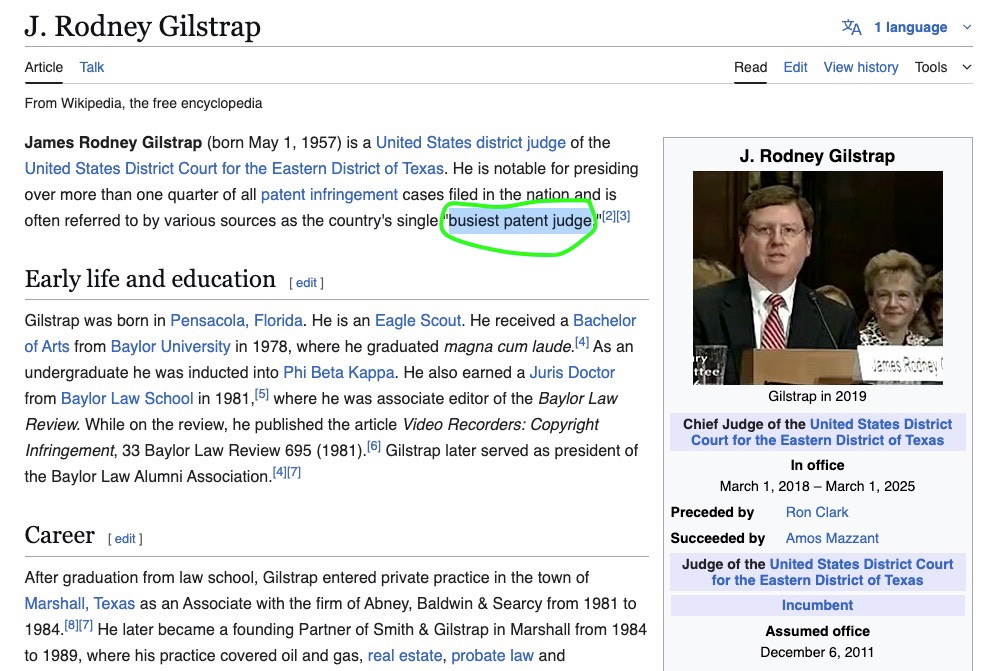

Judicial endorsement. In Micron’s case, Judge Gilstrap denied all post-trial motions, calling the evidence “substantial,” which strengthens the verdict on appeal

Judge Gilstrap: Ruled in favour of NLST in the case against MU in Texas

He handles 25% of US patent cases – Wields unmatched influence in tech litigation.

1,600+ cases since 2011 – Top judge by volume and experience.

⚖️ Plaintiff-friendly Texas court – 90% civil caseload, magnet for patent disputes.

40-50 jury trials – Overturned just one verdict in his career.

NLST ruling stands strong – High odds of surviving appeals or retrials.

Key takeaway: Gilstrap’s track record and venue dominance make his rulings critical for tech patent battles.

Timelines you can realistically expect

In my opinion, final resolution is likely in early 2026.

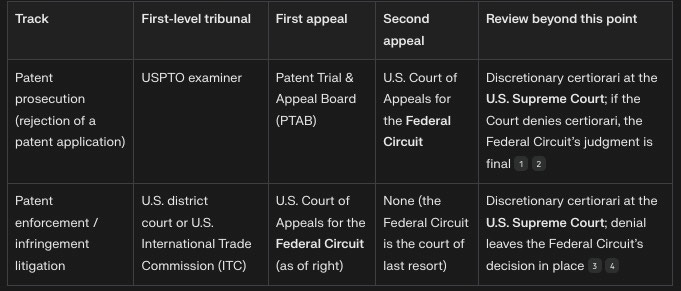

Patent disputes can move through two separate tracks, each with its own “ceiling” for further review.

PTAB is preliminary and CAFC is final. We are on the final lap of this litigation, with CAFC decisions ahead.

Federal Circuit (CAFC) appeals of the 2023 Samsung and 2024 Micron verdicts

Notice of appeal already filed (Micron) or imminent (Samsung).

Median CAFC time from docketing to opinion in patent cases is 12–18 months; recent Netlist analyst notes assume mid-2026 resolution [1].

If the CAFC affirms (or only trims damages), Samsung and Micron would owe the judgments plus pre- and post-judgment interest—roughly late-2026 payout unless they petition the Supreme Court.

New Eastern-Texas HBM/DDR5 case

Judge Gilstrap’s median to trial is ~24–30 months. That places jury selection around Q3-2027, assuming no stay.

Parallel PTAB reviews (if instituted) add 12–15 months but Gilstrap rarely stays cases for IPR once a scheduling order is in place.

Google case in Northern-California

Stayed pending PTAB/CAFC resolution on claim 16. If the CAFC finishes those reviews by mid-2026, the stay should lift, and trial could follow in 2027 under Judge Seeborg’s docket pace.

Will everything reach higher federal courts?

All current venues (E.D. Tex., C.D. Cal., N.D. Cal.) are already U.S. federal district courts. The next level is the U.S. Court of Appeals for the Federal Circuit, which has exclusive jurisdiction over patent appeals. The Supreme Court hears fewer than 2% of CAFC patent petitions each term; unless the cases raise a broad legal question, the odds of SCOTUS review are low.

Likelihood of Netlist prevailing on appeal

Historical CAFC statistics show:

73% of jury validity findings are affirmed in full or in part.

58% of infringement findings are affirmed.

Damages are modified or vacated in ~40% of cases but rarely reversed outright.

Given that Netlist’s verdicts survived rigorous Rule 50 and Rule 59 motions before patent-savvy judges, its odds look better than the general averages. Add the defendants’ willfulness findings—which the CAFC reviews for abuse of discretion—and Netlist appears more likely than not (>50%) to keep a substantial portion of the awards.

Most importantly, CEO Hong had his reasons to buy 4.28M shares at $0.70. The 8.5M warrants linked to this big insider buy would be totally worthless, and the $3M spent would be lost, if Netlist does not succeed. Does anybody believe that a guy like Hong gambles $3M from his own pocket?

CEO Hong has NEVER bought significant amounts of NLST before. Here is his record. I think it is significant that he made a purchase worth, by my estimate, about 50% of his entire net worth to keep NLST afloat.
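The insider-buy numbers can be checked against the current $0.62 price in a few lines of Python. This uses the 4.28M shares and $3M from the paragraph above. It ignores the 8.5M warrants, whose terms are not given here.

```python
spent_usd = 3.0e6
shares_bought = 4.28e6
current_price = 0.62

avg_price = spent_usd / shares_bought          # roughly $0.70 per share
discount = 1 - current_price / avg_price       # how much cheaper today's price is
stake_now = shares_bought * current_price      # his purchase marked to today's price

print(f"average price: ${avg_price:.2f}")
print(f"today's price is {discount:.1%} below his entry")
print(f"his purchase marked at $0.62: ${stake_now / 1e6:.2f}M")
```

Buying at $0.62 means entering about 11.5% below his average price, and his $3M purchase is currently marked at roughly $2.65M.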

Bottom line

Either NLST buys yachts, or we eat ramen. No middle ground!!

Momentum: Netlist has not lost a major motion since 2023 and now has almost $900M in confirmed judgments.

Timeframe to money: Earliest cash event is CAFC affirmance—late 2026 is realistic. Faster resolution could come via settlement, but history suggests Samsung and Micron litigate till the very end.

Risk factors: The CAFC could reduce damages, invalidate some claims, or order new trials; the PTAB could still institute new reviews of the freshly asserted patents. Netlist might run out of money and dilute further.

Overall assessment: Based on the undefeated trial record, failed PTAB attacks, and deference given to jury findings, the litigation tilts in Netlist’s favour, although investors must plan for a multi-year timeline and the usual appellate volatility.

Insider buy: The long-time CEO and founder of NLST bought $3M worth of stock in June at $0.70 per share. If you buy today, you get it cheaper than he did. He has been CEO for 25 years and has been litigating for more than 16 of those years.

This makes me wanna swim in the same pond as him.

And lastly, NLST is planning to put MRDIMMs of its own into production and expects high margins on them. This is what CEO Hong said on 27 March 2025:

It's an all-or-nothing stock. If the litigation resolves in Netlist's favour, I think NLST could be a 15-30X stock. If not, most of your investment could easily evaporate, with a loss of 50-90%.

It's an ALL OR NOTHING play.
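The all-or-nothing framing can be turned into a break-even probability: the chance of winning at which the bet's expected value equals the money put in. Here is a minimal Python sketch that assumes exactly two outcomes (a 15-30X gain or a 50-90% loss) and ignores time value, further dilution and position sizing. It is a way to frame the ranges above, not a valuation.

```python
def expected_value(p_win: float, win_multiple: float, loss_fraction: float) -> float:
    """Expected ending value per $1 invested in a simple two-outcome bet."""
    return p_win * win_multiple + (1 - p_win) * (1 - loss_fraction)


def breakeven_probability(win_multiple: float, loss_fraction: float) -> float:
    """Win probability at which the expected ending value equals the $1 put in.

    Solves p*M + (1 - p)*(1 - L) = 1 for p, giving p = L / (M - 1 + L).
    """
    return loss_fraction / (win_multiple - 1 + loss_fraction)


# Corners of the ranges quoted above: 15-30x on a win, 50-90% loss otherwise.
for multiple in (15, 30):
    for loss in (0.5, 0.9):
        p = breakeven_probability(multiple, loss)
        print(f"{multiple}x win, {loss:.0%} loss -> break-even at p = {p:.1%}")
```

Across the corners of those ranges, break-even lands between roughly 1.7% and 6.0%. Whether the real odds sit above that is exactly the litigation question covered earlier in this post.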

PS. At the exact moment of publishing, an SEC filing surfaced that sets the annual shareholders' meeting for 25 Sep 2025. At that meeting, the company wants a vote to allow up to 50% dilution if the board deems it necessary. Because of this, I would not make all of my purchases at once. Wait a little and make small purchases, as the stock might dilute further.

I am unsure why the date of 24 July is used. It is still a week ahead right now.
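Here is what a 50% dilution means for ownership, sketched in Python under the simplifying assumption that the full amount is issued. This shows the ownership effect only. New shares are normally sold for cash, which offsets part of the per-share impact. Whether the 50% applies to the basic or fully diluted share count is not stated, so the 450M base below is an assumption.

```python
def diluted_fraction(new_share_fraction: float) -> float:
    """Fraction of the company each existing share still represents after the
    share count grows by `new_share_fraction` (0.5 means +50% shares)."""
    return 1 / (1 + new_share_fraction)


# ASSUMPTION: the full 50% is issued.
remaining = diluted_fraction(0.5)
print(f"each share keeps {remaining:.1%} of its prior ownership")  # 66.7%

# ASSUMPTION: dilution applied to the ~450M fully diluted share count quoted earlier
print(f"share count after +50%: {450 * 1.5:.0f}M")
```

In other words, a full 50% issuance would cut each existing share's slice of the company by a third.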

Anyhow, in Part 2 of MRDIMM, we are going to look at much bigger companies focusing on MRDIMM. There are two more companies, each with a >$1B market cap and a pure-play focus on MRDIMM.

Right now, they are not at support levels or overlooked territory, so I will post them at the right time.
