AI data centre architecture is preparing for 1MW+ racks and 1GW+ total power

Many small caps that haven't moved yet will be beneficiaries of this trend.

Everyone’s drooling over trillion-parameter models, yet the real Darth Vader is actually amperes and losses, rather than tokens. Keep jamming 12 V into 1 MW racks and you’ll melt bus-bars faster than Sam Altman can tweet “compute-constrained.”

High Voltage is the new oil—watch.

Unless we increase voltage, the copper wires in AI data halls will be as thick as Slytherin’s serpent.

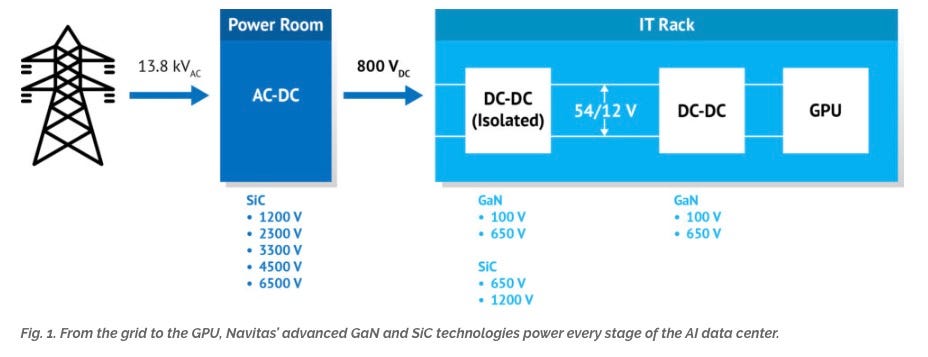

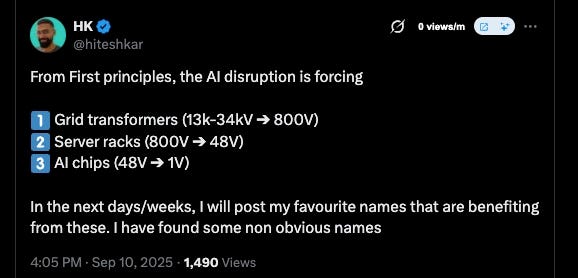

As AI models grow larger and data centers scale up, the infrastructure that powers them is being fundamentally re-architected. The traditional voltage-conversion paths are being pushed to their limits, forcing innovation across the entire power chain:

1️⃣ Grid transformers stepping down from 13-34 kV to 800 V

2️⃣ Server racks converting 800 V to 48 V

3️⃣ AI chips operating at just under 1 V

Each stage in this cascade is both a technical challenge and an opportunity. The AI disruption is happening deep in the physical layers: the power grid, the server racks, and the silicon.
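To put numbers on each stage, here is a minimal Python sketch that computes the current needed to deliver a hypothetical 1 MW load at each voltage in the cascade. It assumes unity power factor on the three-phase 34 kV side and ignores conversion losses, so every stage carries the same 1 MW.

```python
import math

RACK_POWER_W = 1_000_000  # hypothetical 1 MW load, matching the headline figure

def dc_current_a(power_w: float, volts: float) -> float:
    """Current drawn by a DC load: I = P / V."""
    return power_w / volts

def three_phase_current_a(power_w: float, line_volts: float, power_factor: float = 1.0) -> float:
    """Line current of a balanced three-phase AC load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_w / (math.sqrt(3) * line_volts * power_factor)

# Conversion losses are ignored: every stage carries the same 1 MW.
stages = [
    ("34 kV AC (3-phase)", three_phase_current_a(RACK_POWER_W, 34_000)),
    ("800 V DC", dc_current_a(RACK_POWER_W, 800)),
    ("48 V DC", dc_current_a(RACK_POWER_W, 48)),
    ("1 V at the die", dc_current_a(RACK_POWER_W, 1)),
]

for name, amps in stages:
    print(f"{name:>20}: {amps:>12,.0f} A")
```

From roughly 17 A on the grid side to a million amps at 1 V: in practice that last figure is split across many chips, each fed by its own converter right next to the silicon.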

The winning stack is

34 kV AC → 800 V DC → 48 V SELV → 1 V (at the die).

This is what the industry is now converging on. Let me explain from the basics.

INSIGHT 0: PHYSICS NEVER BLUFFS

Electrical Power (P) = Voltage (V) × Current (I)

Power is the rate at which work is done. Voltage is the electrical potential difference, and current is the rate at which charge (electrons) flows.

The best analogy: think of voltage as water pressure, and current as the amount of water flowing through the pipes.

So if you want more power, you can increase either I or V. The catch is that the resistive (conduction) losses in the wiring are given by

Loss = I² × R.

So ideally you want current as low as possible, since losses grow with the square of the current.

So: double the voltage → halve the current → quarter the losses (same power, same conductor).
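A quick way to see the quadratic effect: the sketch below delivers the same power through the same conductor at increasing voltages. The 100 kW load and the 0.1 milliohm of cable plus bus-bar resistance are hypothetical values chosen only for illustration.

```python
def conduction_loss_w(power_w: float, volts: float, resistance_ohm: float) -> float:
    """I^2 * R loss in a conductor that delivers power_w at the given voltage."""
    current_a = power_w / volts
    return current_a ** 2 * resistance_ohm

POWER_W = 100_000   # hypothetical 100 kW load
R_OHM = 0.0001      # hypothetical 0.1 milliohm of cable plus bus-bar

for volts in (12, 24, 48, 96):
    loss_w = conduction_loss_w(POWER_W, volts, R_OHM)
    print(f"{volts:>3} V: {loss_w:>8,.0f} W lost ({loss_w / POWER_W:.2%} of the load)")
```

Each doubling of voltage cuts the loss to a quarter: roughly 6.9 kW at 12 V versus about 0.43 kW at 48 V for the same copper.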

Lower current also shrinks:

– Cable cross-section (copper ≈ $8/kg)

– Bus-bar mass (try lifting 2 m of 4,000 A aluminum)

– Connector bulk & labor.

Higher Voltage is basically free efficiency?

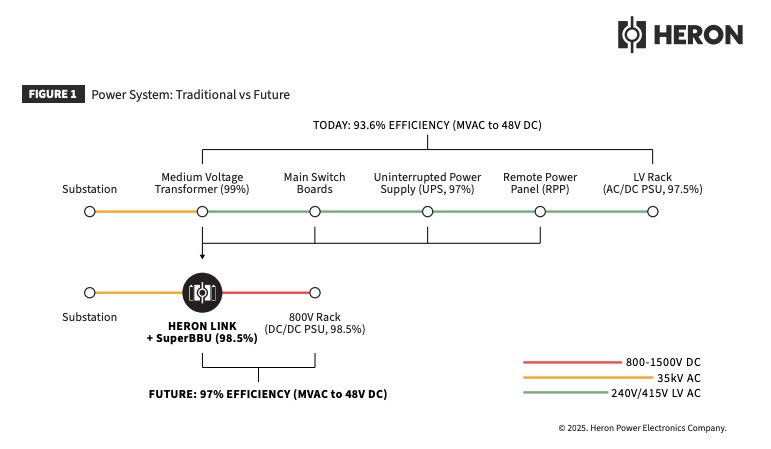

HERON, the new startup from Drew Baglino (ex-Tesla CTO or VP, nobody could settle on his title; basically the ex-battery boss), explains it well in their white paper. Basically, they want to delete a bunch of conversion steps in the middle and raise efficiency by 2-4%. That means a 1 GW data centre would save roughly 20-40 MW. This is huge.

The fact that Drew is launching a startup to supply solid-state transformers (SSTs) tells you all you need to know about the market shortage. A few incumbents, such as Siemens and ABB, might be able to produce them; it remains to be seen whether they will come through.

I have a few small-cap stocks that will benefit from this trend, which I'll reveal in the following posts.

INSIGHT 1: FROM GRID TO HALL

(34 kV AC → 800 V DC)

Status quo:

• 13-34 kV utility line → 480 V AC transformer → central UPS (AC→DC→AC) → PDUs.

• Five fridge-sized boxes, ~93% end-to-end efficiency (93-94% even with the best equipment, mind you), and weeks of field wiring. Meh.

Upgraded path:

• Solid-state transformers (SSTs) spit 800 V DC straight into the hall.
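Why deleting boxes matters: cascaded converters multiply their efficiencies, so every stage in the chain takes its own cut. The sketch below compares the two paths on a 1 GW site. The per-stage efficiencies are illustrative assumptions (picked so the legacy chain lands near the ~93% quoted above), and the 97% SST figure is also an assumption, not a Heron or vendor spec.

```python
from math import prod

# Hypothetical per-stage efficiencies (illustrative assumptions, not vendor data).
LEGACY_CHAIN = {
    "MV transformer 34 kV -> 480 V AC": 0.985,
    "UPS rectifier (AC -> DC)": 0.980,
    "UPS inverter (DC -> AC)": 0.975,
    "PDU": 0.990,
}
SST_CHAIN = {
    "Solid-state transformer 34 kV AC -> 800 V DC": 0.970,
}

def chain_efficiency(stages: dict[str, float]) -> float:
    """Cascaded converters multiply: eta_total = eta_1 * eta_2 * ... * eta_n."""
    return prod(stages.values())

GRID_INPUT_MW = 1000  # 1 GW site, as in the text

legacy = chain_efficiency(LEGACY_CHAIN)
sst = chain_efficiency(SST_CHAIN)
print(f"legacy chain: {legacy:.1%}")
print(f"SST chain:    {sst:.1%}")
print(f"extra power reaching the hall: {(sst - legacy) * GRID_INPUT_MW:.0f} MW")
```

With these assumed numbers the legacy chain comes out at about 93.2% and the gain is roughly 38 MW, inside the 2-4% range HERON is targeting.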

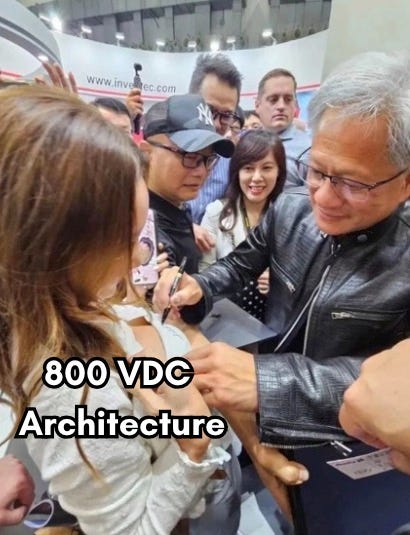

NVIDIA has been on this 800 V train for a couple of years now.

Moving to 800V DC transmission is quite a big deal, as it allows for significant efficiency improvements, large cost savings in materials needed, and much higher upper limits on per-rack and per-data center power delivery.

While the 800 VDC notion isn’t new, having the world’s largest chip designer fully embrace the standard throws substantial weight behind it.

Let’s face it, we all want this on our chest too. A mark of confidence.

SiC MOSFETs, film capacitors and breakers all hit their sweet spot around 1 kV.

Punchline: The same copper that once fed 15 MW now pumps 30 MW. Congrats, you just doubled your data-hall without touching real estate.

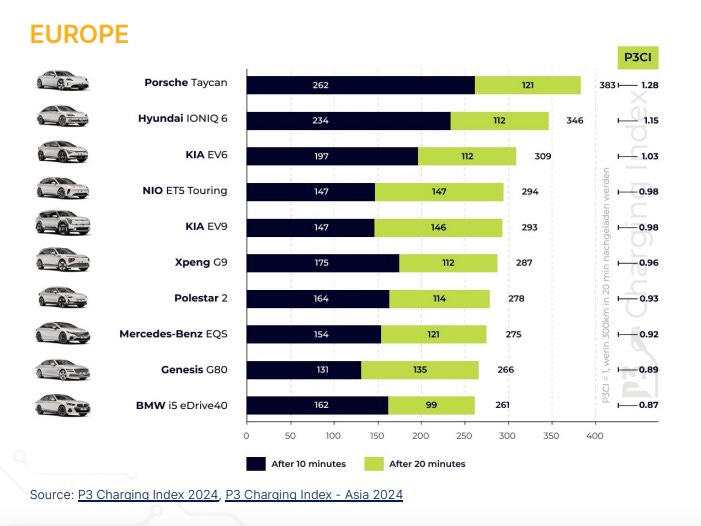

Porsche was the first automaker to switch to an 800 V charging system. The result was the fastest-charging car on the European continent: 262 km of range in 10 mins. Naice.

INSIGHT 2: INSIDE THE RACK

(800 V → 48 V)

Okay, now you’ve reached the rack with 800 V. What happens next?

# STATUS QUO

• 400/480 V AC enters a rack-level PDU/PSU.

• That PSU outputs **12 V (sometimes 24 V) DC**, typically ≤5 kW.

• Current at 12 V is huge (5 kW / 12 V ≈ 417 A), so each rack tops out at ~20 kW before copper, breakers and heat become unmanageable.

# NEW TREND

• A few high-frequency LLC (or other resonant) converters take 800 V → 48 V, yielding a rack-level **DC bus-bar at 48 V SELV** (safety extra-low voltage).

• 48 V lets the rack carry 100 kW with 2 cm-wide copper plates instead of water-cooled cables.
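Here is a rough check on those two bullets. The sketch treats the ~20 kW ceiling at 12 V as a current limit, which is an assumption: the text blames copper, breakers and heat, all of which scale with current. It then asks how much power the same current delivers at 48 V. The function names are illustrative.

```python
def bus_current_a(rack_power_w: float, bus_volts: float) -> float:
    """Current drawn from the rack bus: I = P / V."""
    return rack_power_w / bus_volts

def max_rack_power_w(bus_current_limit_a: float, bus_volts: float) -> float:
    """At a fixed current limit (same copper, same heat), deliverable power scales with voltage."""
    return bus_current_limit_a * bus_volts

# Assumption: the ~20 kW ceiling at 12 V from the text is set by current.
limit_a = bus_current_a(20_000, 12)  # ~1,667 A

print(f"12 V bus current limit: {limit_a:,.0f} A")
print(f"same current at 48 V:   {max_rack_power_w(limit_a, 48) / 1000:.0f} kW")
print(f"100 kW at 48 V draws:   {bus_current_a(100_000, 48):,.0f} A")
print(f"100 kW at 12 V draws:   {bus_current_a(100_000, 12):,.0f} A")
```

Same current, 4× the voltage, 4× the power: about 80 kW. Reaching 100 kW at 48 V takes about 2,083 A, roughly 25% more current than the 12 V ceiling, while the same 100 kW at 12 V would need over 8,300 A.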

But why 48 V and not 100 V?

• ≤60 V is exempt from most touch-safety regulations, avoiding arc-flash PPE inside the row.

• Commodity MOSFETs & controllers work efficiently up to 60 V.

• Server vendors (via OCP Open Rack v3, a.k.a. ORV3) have already converged on 48 V blind-mate connectors.

Result: single GPU racks exceeding 150 kW become practical; the rack PSU loses only ≈1% instead of ≈3% at 12 V, not to mention the copper and cooling it avoids.
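In heat terms, those two percentage points are easy to quantify. This sketch approximates PSU loss as a fixed fraction of rack power, using the ≈1% and ≈3% figures above for a 150 kW rack. The energy figure assumes full load all year, so treat it as an upper bound.

```python
RACK_POWER_W = 150_000   # the 150 kW rack from the text
HOURS_PER_YEAR = 8_760

def psu_heat_w(rack_power_w: float, loss_fraction: float) -> float:
    """Heat dissipated in the rack PSU, approximating loss as a fraction of rack power."""
    return rack_power_w * loss_fraction

heat_48v = psu_heat_w(RACK_POWER_W, 0.01)  # ~1 % loss quoted for the 48 V design
heat_12v = psu_heat_w(RACK_POWER_W, 0.03)  # ~3 % loss quoted at 12 V
# Upper bound: assumes the rack runs at full load every hour of the year.
saved_kwh = (heat_12v - heat_48v) / 1000 * HOURS_PER_YEAR

print(f"48 V PSU heat: {heat_48v / 1000:.1f} kW")
print(f"12 V PSU heat: {heat_12v / 1000:.1f} kW")
print(f"energy not wasted per rack-year at full load: {saved_kwh:,.0f} kWh")
```

That is 3 kW less heat per rack for the cooling plant to remove, before counting any copper savings.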

INSIGHT 3: ON THE BOARD

(48 V → 1 V @ <1,000 A)

# STATUS QUO

• 12 V → 1 V multi-phase bucks; 600 A rivers across FR-4. The entire PCB turns into a copper heatsink. PCBs would end up looking like this.

This image is very sarcastic btw. That’s not how you can actually produce PCBs.

# NEW TREND

• Factorized 48 V → 1 V modules sit millimetres from the processor’s ball grid array (BGA), the package’s grid of solder-ball connections.

• Until the final inch, you’re only pushing 12 A; transient droop becomes a rounding error.

• GPUs still sip ~0.9 V: the physics of 5 nm CMOS didn’t change; we just kept cramming in more transistors.

Nvidia GPUs have evolved from about 400 watts on Ampere, to 700 watts on Hopper, to around 1400 watts on Blackwell, with next-generation chips expected to exceed 2000 watts.

Super-nerd stat: NVIDIA B200 (1.4 kW) needs ~1,400 A at 1 V. Try routing that at 12 V upstream. Your board traces will literally delaminate.
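Here is the B200 stat worked through. This sketch computes the current on each rail for a 1.4 kW GPU and how the I²R loss in the same piece of copper compares with the 1 V side. It assumes lossless conversion by default (`efficiency=1.0`); the parameter is there only to show that a real converter draws a bit more.

```python
GPU_POWER_W = 1_400  # B200-class power figure from the text
CORE_VOLTS = 1.0     # round-number die voltage used in the text

def rail_current_a(power_w: float, volts: float, efficiency: float = 1.0) -> float:
    """Current drawn from a rail that feeds power_w through a converter of the given efficiency."""
    return power_w / (efficiency * volts)

core_amps = rail_current_a(GPU_POWER_W, CORE_VOLTS)
print(f"at the die ({CORE_VOLTS:.0f} V): {core_amps:,.0f} A")

for rail_volts in (12, 48):
    amps = rail_current_a(GPU_POWER_W, rail_volts)
    # Through the same copper, I^2 R scales with (I_rail / I_core)^2 = (V_core / V_rail)^2.
    loss_ratio = (core_amps / amps) ** 2
    print(f"{rail_volts} V rail: {amps:,.1f} A, about 1/{loss_ratio:,.0f} of the 1 V trace loss")
```

The 48 V rail carries about 29 A, and the same trace would dissipate about 1/2,304 of what it would at 1 V. That is why the last conversion step belongs millimetres from the die.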

There is one player that can deliver this kind of amperage. NVIDIA banished them 2 years ago. They report earnings today; let’s see if there’s any news.

Power semiconductors are usually very price-elastic, and it’s quite a hard business.

Okay, so let’s summarize:

• Efficiency: nuke two conversion stages → +3-5% site-wide. That’s 30-50 MW saved on a 1 GW site. Enough juice for another AI wing.

• Power density: racks go from 20 kW/rack → 200 kW/rack, meaning 10× the compute per square foot.

• Copper savings: halve the current and, for the same I²R loss, conductor mass drops to ~0.25×. With 2025 LME copper at ~$9,000/tonne, do the math.

• Cooling headroom: less PSU heat → more delta-T for the H100 heat sinks.

• Batteries: 800 V matches battery-pack voltages, so you skip the double conversion, slot in LFP cabinets, and go sip piña coladas all day.
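For "do the math" on copper, this sketch sizes a hypothetical 1 MW DC feed over a 50 m run for a fixed 1% conduction-loss budget. Assumptions: a two-conductor DC feed, annealed-copper resistivity of 1.68e-8 Ω·m, density of 8,960 kg/m³, and the $9,000/tonne price from the text. Real conductors are also sized for ampacity and voltage drop, which this ignores.

```python
COPPER_RESISTIVITY_OHM_M = 1.68e-8  # annealed copper at ~20 °C
COPPER_DENSITY_KG_M3 = 8_960
COPPER_PRICE_USD_PER_KG = 9.0       # $9,000/tonne, the 2025 LME figure from the text

def copper_for_feed(power_w: float, volts: float, one_way_m: float, loss_fraction: float):
    """Size a two-conductor DC feed so that I^2 R = loss_fraction * power_w.

    I = P / V and R = rho * L_loop / A, so A = rho * L_loop * P / (loss_fraction * V^2):
    conductor area, mass and cost all scale with 1 / V^2.
    Returns (area in mm^2, mass in kg, cost in USD).
    """
    loop_m = 2 * one_way_m
    area_m2 = COPPER_RESISTIVITY_OHM_M * loop_m * power_w / (loss_fraction * volts ** 2)
    mass_kg = COPPER_DENSITY_KG_M3 * area_m2 * loop_m
    return area_m2 * 1e6, mass_kg, mass_kg * COPPER_PRICE_USD_PER_KG

# Hypothetical feed: 1 MW over a 50 m run, with a 1 % conduction-loss budget.
for volts in (48, 400, 800):
    area_mm2, mass_kg, cost_usd = copper_for_feed(1e6, volts, 50, 0.01)
    print(f"{volts:>3} V: {area_mm2:>9,.0f} mm^2, {mass_kg:>9,.0f} kg, ${cost_usd:>10,.0f}")
```

Going from 400 V to 800 V cuts the copper to a quarter (about 941 kg down to about 235 kg here). At 48 V the same feed would need tens of tonnes: the Slytherin-serpent scenario.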

Here again, I have a misunderstood small cap that will benefit from this trend. Many thought leaders left it for dead, but it might see a quick 2X in market cap.

TL;DR

Voltage is the cheat code. Crank it up early and keep it up for as long as possible. Then step it down ONLY inches from the silicon, and you unlock power-dense, greener, cheaper AI factories.

Anything else is just setting money—and copper—on fire.

Finally got this post out. I’ll share the beneficiaries of this trend in the next post.

The unloved small cap is $VICR. They killed it during earnings. Sorry guys, I couldn’t send out my research before it blew up; it’s already up 45%.

https://x.com/hiteshkar/status/1980753990502564327
